
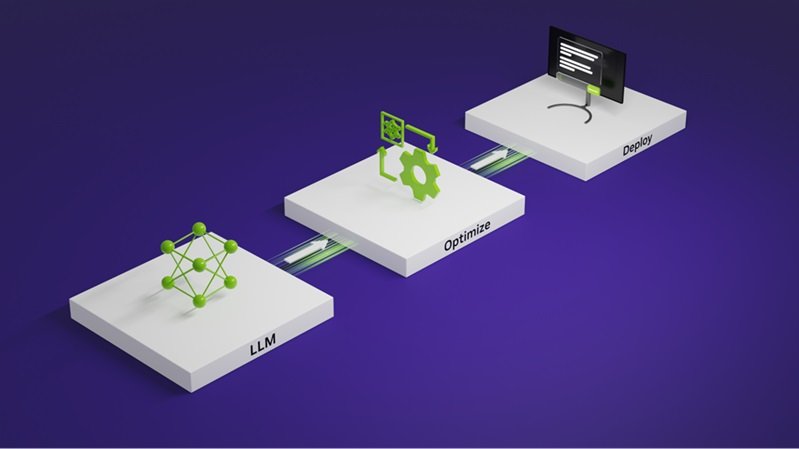

Introduction – LLM Optimization

In the world of artificial intelligence, Large Language Models (LLMs) like GPT-4 have become game-changers, revolutionizing everything from chatbots to automated content creation. But, like any powerful tool, they need to be adapted and optimized before they perform well in a specific application. If you’re an IT engineer diving into LLMs, understanding optimization techniques is crucial to getting the most out of these models. Let’s explore these techniques in detail, with real-life examples to help you apply them effectively.

Understanding the Basics of LLMs

Before we delve into optimization techniques, let’s quickly recap what LLMs are. Large Language Models are deep learning models trained on massive datasets of text. They learn to generate human-like text by predicting the next token (a word or word fragment) from the preceding context, picking up patterns of grammar, meaning, and style along the way. While powerful out of the box, LLMs often need to be optimized to meet specific requirements, such as reducing inference time, improving accuracy, or adapting to a particular domain.

1. Fine-Tuning: Tailoring the Model to Your Needs

One of the most effective optimization techniques is fine-tuning. Fine-tuning means continuing to train an already pre-trained LLM on a smaller, domain-specific dataset. This allows the model to adapt its general knowledge to a particular context.

Example: Imagine you’re working on a customer service chatbot for a healthcare company. A generic LLM might not know the intricacies of medical terminology. By fine-tuning the model on a dataset of healthcare-related queries and responses, you can significantly improve its accuracy and relevance in this domain.
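To make the idea concrete, here is a deliberately tiny Python sketch. A two-weight linear model stands in for the LLM, and its hypothetical “pre-trained” weights are further trained with gradient descent on a small, made-up domain dataset. Real LLM fine-tuning would use a deep learning framework and vastly larger models; the names `predict`, `mse`, and `fine_tune` are illustrative only.

```python
# Toy illustration of fine-tuning: keep training "pre-trained" weights on a small
# domain dataset. The linear model and all numbers are hypothetical stand-ins.

def predict(weights, bias, x):
    """Linear model: dot(weights, x) + bias."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def mse(weights, bias, data):
    """Mean squared error over (features, target) pairs."""
    return sum((predict(weights, bias, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(weights, bias, data, lr=0.05, epochs=200):
    """Continue full-batch gradient descent from the given (pre-trained) parameters."""
    weights = list(weights)  # copy, so the original pre-trained weights are not modified
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in data:
            err = predict(weights, bias, x) - y
            for i, xi in enumerate(x):
                grad_w[i] += 2 * err * xi / n
            grad_b += 2 * err / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


# Hypothetical parameters "pre-trained" on general data.
pretrained_w, pretrained_b = [1.0, 0.5], 0.0

# Small hypothetical domain dataset following y = 1.2*x0 + 0.3*x1 + 0.1.
points = [(0.0, 1.0), (1.0, 0.0), (1.0, 1.0), (2.0, 1.0), (0.5, 2.0)]
domain_data = [([x0, x1], 1.2 * x0 + 0.3 * x1 + 0.1) for x0, x1 in points]

before = mse(pretrained_w, pretrained_b, domain_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, domain_data)
after = mse(tuned_w, tuned_b, domain_data)
print(f"domain MSE before fine-tuning: {before:.4f}, after: {after:.4f}")
```

The key point is the starting position: training begins from weights that already roughly fit, so a small dataset and a modest number of updates are enough to adapt them.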

2. Parameter Pruning: Trimming the Fat

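Large models often contain billions of parameters, not all of which are necessary for a specific task. Parameter pruning removes the least important parameters (for example, the weights with the smallest magnitudes), which reduces the model’s size and can improve efficiency without sacrificing too much accuracy. How much speed you actually gain depends on whether your runtime and hardware can take advantage of the resulting sparsity.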

Example: If you’re deploying an LLM on a mobile app where resources are limited, you might prune unnecessary parameters to make the model lighter and faster, ensuring it runs smoothly on devices with lower processing power.
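A minimal sketch of magnitude pruning in plain Python, assuming “least important” means “smallest absolute value,” which is a common heuristic but not the only one. The function `magnitude_prune` and the weight values are hypothetical.

```python
# Toy illustration of unstructured magnitude pruning on a flat list of weights.
# Assumption: "least important" = smallest absolute value (a common heuristic).

def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to 0.0."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.8, -0.05, 0.3, 0.01, -0.9, 0.2, -0.02, 0.6]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.9, 0.0, 0.0, 0.6]
nonzero = sum(1 for w in pruned if w != 0.0)
print(f"kept {nonzero} of {len(weights)} weights")
```

Zeroing individual weights like this (unstructured pruning) only saves memory or time if the weights are stored in a sparse format or the hardware exploits sparsity. Structured pruning, which removes whole neurons or attention heads, shrinks the dense matrices directly.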

3. Quantization: Reducing Model Precision

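Quantization is another technique to optimize LLMs by reducing the numerical precision of the model’s weights. Instead of storing each weight as a 32-bit floating-point number, quantization converts it to a lower-precision format, such as an 8-bit integer. Going from 32 to 8 bits cuts weight memory by a factor of four, and low-precision arithmetic is often faster on hardware that supports it, at the cost of a small rounding error.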

Example: A real-world application of quantization can be seen in AI-powered voice assistants. These assistants need to respond quickly and accurately on devices with limited computational resources. Quantizing the LLM behind the voice assistant ensures it can process requests efficiently, even on less powerful hardware.
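The arithmetic behind 8-bit quantization can be sketched with a symmetric, per-tensor scheme: pick one scale so the largest absolute weight maps to 127, round every weight to the nearest integer step, and multiply back by the scale when computing. This is one simple scheme among several (production toolkits often use per-channel or per-group scales), and the function names here are hypothetical.

```python
# Toy illustration of symmetric, per-tensor int8 quantization.
# Each weight w is stored as an integer q in [-127, 127] plus one shared float scale,
# so that w is approximately scale * q.

def quantize_int8(weights):
    """Map float weights to int8 values using a single scale for the whole tensor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [scale * qi for qi in q]


weights = [0.423, -1.27, 0.0, 0.9051, -0.3317]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q)  # [42, -127, 0, 91, -33]
print(f"scale={scale:.4f}, max rounding error={max_error:.6f}")
```

Because each weight shrinks from 4 bytes to 1, a hypothetical 7-billion-parameter model would need roughly 28 GB for its weights at 32-bit precision versus roughly 7 GB at 8-bit, plus a small overhead for the scales. The rounding error per weight is at most half a scale step.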

4. Knowledge Distillation: Creating a Student Model

Knowledge distillation involves training a smaller, more efficient model (the student) to mimic the behavior of a larger model (the teacher). The student model learns to approximate the outputs of the teacher model but with fewer parameters, making it faster and less resource-intensive.

Example: In a scenario where you’re developing an AI tool for real-time translation, using the full version of an LLM might be too slow. By applying knowledge distillation, you can create a smaller model that produces translations close in quality to the larger model’s in a fraction of the time, improving user experience.
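The heart of knowledge distillation is the training loss. This sketch follows the widely used formulation from Hinton et al. (2015): the student is pushed to match the teacher’s temperature-softened output probabilities, blended with ordinary cross-entropy on the true label. The logits are made-up numbers, and `temperature` and `alpha` are hyperparameters you would tune; the function names are illustrative.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; terms where p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, true_label, temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target loss (match the teacher) and hard-label cross-entropy."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # Multiplying by T^2 keeps the soft-target gradients on a comparable scale as T changes.
    soft_loss = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


# Made-up logits over three classes; class 0 is the correct label.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 3.0, 2.0]
print(distillation_loss(close_student, teacher, true_label=0))
print(distillation_loss(far_student, teacher, true_label=0))
```

A student whose outputs resemble the teacher’s gets a lower loss, and minimizing this loss during training is what transfers the teacher’s behavior into the smaller model.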

5. Transfer Learning: Leveraging Pre-Trained Models

Transfer learning is a technique where you take a model trained on one task and adapt it for another related task. This approach is particularly useful when you have limited data for the new task but can leverage the knowledge the model has already gained.

Example: Suppose you’re building a sentiment analysis model for social media posts. You could start with a model pre-trained on general text data and then fine-tune it using a smaller dataset of annotated social media posts. This approach speeds up training and typically improves accuracy in the new domain compared with training from scratch on the small dataset alone.
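One common form of transfer learning keeps the pre-trained model frozen and trains only a small new “head” on top of its features. In this sketch, a fixed keyword counter is a hypothetical stand-in for a pre-trained model’s text embeddings, and a logistic-regression head is trained on a handful of made-up labeled posts. Fine-tuning, as described earlier, goes further by also updating the pre-trained weights.

```python
import math

# Hypothetical frozen "pre-trained" feature extractor. In a real system this would be a
# pre-trained language model producing text embeddings; a fixed keyword counter keeps
# the example self-contained. Its behavior is never changed during training.
POSITIVE_WORDS = {"love", "great", "awesome", "happy"}
NEGATIVE_WORDS = {"hate", "awful", "terrible", "sad"}


def pretrained_features(text):
    tokens = text.lower().split()
    return [
        float(sum(t in POSITIVE_WORDS for t in tokens)),
        float(sum(t in NEGATIVE_WORDS for t in tokens)),
        1.0,  # constant bias feature
    ]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, lr=0.5, epochs=200):
    """Train only a new logistic-regression head with SGD; the extractor stays frozen."""
    feats = [(pretrained_features(text), label) for text, label in examples]
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y in feats:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
            # Gradient of the log loss with respect to w is (p - y) * x.
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
    return w


def predict_positive(w, text):
    """Probability that `text` is positive according to the trained head."""
    x = pretrained_features(text)
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)))


# Tiny made-up labeled set (1 = positive, 0 = negative).
posts = [
    ("I love this phone", 1),
    ("great update awesome work", 1),
    ("so happy with the service", 1),
    ("I hate waiting", 0),
    ("awful app terrible support", 0),
    ("sad about the outage", 0),
]
head = train_head(posts)
print(round(predict_positive(head, "what a great day"), 3))
print(round(predict_positive(head, "terrible news"), 3))
```

Because only three head weights are learned, six labeled examples are enough here; the “knowledge” about which words carry sentiment comes from the frozen extractor.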

6. Inference Optimization: Speeding Up Predictions

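Inference optimization focuses on reducing the time it takes for an LLM to generate predictions. Techniques such as batching, where multiple inputs are processed together in a single pass, and running the model on accelerators such as GPUs and TPUs can significantly speed up inference. Batching mainly increases throughput, the number of requests served per second, while faster hardware also reduces the latency of each individual request.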

Example: If you’re deploying an LLM in an online recommendation system, where speed is critical, optimizing inference can make the difference between a responsive system and one that frustrates users with delays.
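Batching can be sketched as grouping incoming requests into fixed-size chunks so the model runs once per chunk instead of once per request. `batched_inference` is an illustrative helper, and `FakeModel` is a hypothetical stand-in that simply counts how many times it is called.

```python
from typing import Callable, List


def batched_inference(
    requests: List[str],
    model: Callable[[List[str]], List[str]],
    max_batch_size: int = 8,
) -> List[str]:
    """Run the model once per group of up to `max_batch_size` requests, preserving order."""
    outputs: List[str] = []
    for start in range(0, len(requests), max_batch_size):
        batch = requests[start:start + max_batch_size]
        outputs.extend(model(batch))  # one model call handles the whole batch
    return outputs


class FakeModel:
    """Hypothetical stand-in for an LLM; it counts calls so the effect of batching is visible."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, batch: List[str]) -> List[str]:
        self.calls += 1
        return [f"recommendation for {request}" for request in batch]


requests = [f"user-{i}" for i in range(20)]
model = FakeModel()
results = batched_inference(requests, model, max_batch_size=8)
print(len(results), model.calls)  # 20 3  (batches of 8, 8 and 4)
```

Real serving systems usually batch dynamically, waiting a few milliseconds to collect requests before running the model, which trades a little latency for much higher throughput.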

7. Adaptive Computation Time (ACT): Dynamic Resource Allocation

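Adaptive Computation Time (ACT) lets a model dynamically adjust its computational effort based on the complexity of the input: after each processing step, the model outputs a halting probability and stops once enough probability has accumulated. ACT was originally proposed by Alex Graves in 2016 for recurrent neural networks, and related ideas such as early exiting have since been explored for Transformer-based language models. For simpler inputs, the model stops after fewer steps, saving resources.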

Example: Consider an AI writing assistant that helps users draft emails. For straightforward sentences, the model can quickly generate suggestions, but for more complex requests, it may take a bit longer, ensuring the right balance between speed and accuracy.
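The halting mechanism of ACT can be sketched for a single scalar state: after each step, a halting probability is added to a running total, and computation stops once the total reaches 1 - epsilon (or a step limit is hit). The output is the average of the intermediate states weighted by those probabilities, with the last step receiving whatever probability mass remains. The step and halting functions here are hypothetical toys in which inputs far from the starting state count as “harder.”

```python
def act_process(x, step_fn, halt_fn, epsilon=0.01, max_steps=10):
    """Adaptive Computation Time halting loop, sketched for a scalar state.

    step_fn(state, x) -> new state; halt_fn(state, x) -> halting probability in (0, 1).
    Returns (output, steps): the halting-probability-weighted mean of the
    intermediate states and the number of steps actually taken.
    """
    state = 0.0
    cumulative = 0.0  # halting probability accumulated over previous steps
    output = 0.0
    for n in range(1, max_steps + 1):
        state = step_fn(state, x)
        h = halt_fn(state, x)
        if cumulative + h >= 1 - epsilon or n == max_steps:
            # The final step receives the remaining probability mass so the weights sum to 1.
            output += (1 - cumulative) * state
            return output, n
        cumulative += h
        output += h * state
    raise ValueError("max_steps must be at least 1")


# Hypothetical toy model: each step moves the state halfway toward x, and the halting
# unit is confident only once the state is close to x, so inputs far from the
# starting state (treated here as "harder") need more steps.
def step_fn(state, x):
    return state + 0.5 * (x - state)


def halt_fn(state, x):
    return 0.9 if abs(x - state) < 0.1 else 0.2


easy_output, easy_steps = act_process(0.1, step_fn, halt_fn)
hard_output, hard_steps = act_process(5.0, step_fn, halt_fn)
print(easy_steps, hard_steps)  # 2 5
```

In the original formulation, a “ponder cost” added to the training loss also discourages the model from using more steps than it needs.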

Conclusion: LLM Optimization

Optimizing LLMs is not just about squeezing more performance out of them; it’s about making them more relevant, efficient, and aligned with the specific needs of your application. Whether you’re fine-tuning a model for a specialized task, pruning parameters for a lightweight deployment, or applying knowledge distillation for faster inference, these techniques help you harness the full potential of LLMs.

In the fast-paced world of IT, where demand for AI-driven solutions is growing rapidly, understanding and applying these LLM optimization techniques can set you apart as an engineer who knows not only how to use cutting-edge technology but also how to refine it for real-world use.

So, what’s your next LLM project? Think about how these optimization techniques can help you achieve your goals more efficiently and effectively.

Happy LLM optimization!
