
When we think about artificial intelligence (AI) and natural language processing (NLP), the conversation often revolves around massive, powerful models like OpenAI’s GPT-4 or Google’s Gemini. These giants have taken the spotlight with their impressive abilities to understand and generate human-like text. But today, let’s shift our focus to the often overlooked yet incredibly valuable small language models. These smaller models are the unsung heroes, working quietly in the background, making AI accessible and efficient for many practical applications.

What Are Small Language Models?

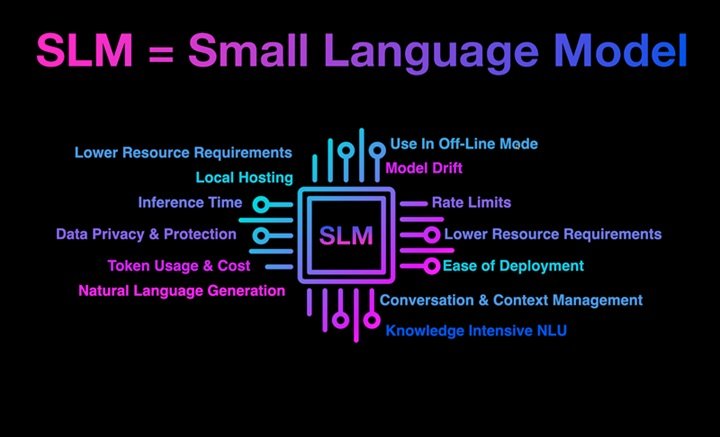

Small language models are AI systems that process and generate text like their larger counterparts, but with far fewer parameters. OpenAI has not published GPT-4’s parameter count, but models of that class are generally thought to have hundreds of billions of parameters or more, whereas small language models range from a few million to a few billion. Despite their smaller size, these models can perform remarkably well on many tasks, especially when compute and memory are limited.

The Beauty of Simplicity

One of the biggest advantages of small language models is their efficiency. They require less computational power, which means they can be run on devices with limited hardware, such as smartphones or embedded systems. This makes them ideal for applications where speed and resource conservation are crucial.

Real-Life Example: Mobile Applications

Consider a mobile app that offers real-time language translation. Using a small language model, the app can quickly translate spoken language into text and then into another language, all on your phone without needing a constant internet connection. This efficiency is not just convenient; it’s essential for travelers who might not always have access to fast internet.

Accessibility and Democratization of AI

Another significant benefit of small language models is their role in making AI more accessible. Because they are less resource-intensive, they lower the barrier to entry for individuals and small businesses that cannot afford the hefty costs associated with running large models.

Real-Life Example: Small Businesses

Imagine a small e-commerce business that wants to implement a chatbot for customer service. A large language model might be overkill and prohibitively expensive to deploy and maintain. Instead, a small language model can be integrated into the website to handle customer queries, recommend products, and provide support, all without breaking the bank.

Performance with Pragmatism

Small language models may not match the largest models at generating human-like text or handling complex queries, but they are often sufficient for practical tasks: many real-world applications need a useful answer, not a perfect one.

Real-Life Example: Email Categorization

Consider the task of sorting emails into different categories (e.g., work, personal, promotions). A small language model can be trained to recognize patterns and keywords in emails to automatically sort them. This functionality can be integrated into email clients, providing users with a cleaner, more organized inbox without needing the latest supercomputer.
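The idea of learning “patterns and keywords” from labeled emails can be sketched without a neural network at all. The block below is a deliberately tiny stand-in: a multinomial naive Bayes classifier, a classic statistical text classifier rather than a language model in the modern sense, trained on a handful of made-up emails. The class name `NaiveBayesEmailSorter` and the sample data are hypothetical; a real email client would more likely fine-tune a small neural model on far more data, but the workflow (labeled examples in, predicted category out) is the same.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    # Lowercase and keep runs of letters only (digits and punctuation are dropped).
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesEmailSorter:
    """Hypothetical sorter: multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, emails, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.vocab = set()
        for text, label in zip(emails, labels):
            words = tokenize(text)
            self.word_counts[label].update(words)
            self.vocab.update(words)
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            # Score = log P(label) + sum over known words of log P(word | label).
            score = math.log(n_docs / total_docs)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for word in tokenize(text):
                if word in self.vocab:  # skip words never seen in training
                    score += math.log((self.word_counts[label][word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Made-up training data for illustration only.
emails = [
    "Meeting moved to 3pm, please update the project agenda",
    "Quarterly report draft attached for review",
    "Happy birthday! Dinner with the family on Sunday?",
    "Photos from our weekend trip with friends",
    "Flash sale: 50% off shoes, limited time offer",
    "Exclusive discount code inside, shop now",
]
labels = ["work", "work", "personal", "personal", "promotions", "promotions"]

sorter = NaiveBayesEmailSorter().fit(emails, labels)
print(sorter.predict("Limited offer: discount on all shoes"))  # → promotions
```

With only six examples this is a toy; the point is that the classifier’s “knowledge” is just word statistics per category, which is why such models stay small and fast.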

Small Models, Big Impact

Small language models also shine in educational settings. They can be used to develop personalized learning tools that adapt to the pace and level of individual students, providing feedback and assistance without the need for an internet connection or powerful servers.

Real-Life Example: Educational Tools

In remote or underserved areas where internet access and advanced hardware are limited, educational tools powered by small language models can offer significant benefits. These tools can help students learn languages, mathematics, and other subjects by providing instant feedback and personalized lessons, making quality education more accessible to everyone.

Conclusion

In a world dominated by headlines about the biggest and most powerful AI models, small language models offer a refreshing perspective on what AI can achieve with fewer resources. They are the workhorses that bring AI into everyday applications, making it accessible, efficient, and practical. From mobile apps and small businesses to educational tools, these models prove that sometimes, less truly is more.

So, the next time you marvel at a sophisticated AI-driven service on your phone or appreciate the efficiency of an automated tool, remember that it might just be a small language model working diligently behind the scenes.

Frequently Asked Questions About Small Language Models:

What is an SLM vs. an LLM?

SLM (Small Language Model) refers to an AI model with fewer parameters, designed for efficiency and lower computational requirements. LLM (Large Language Model) refers to an AI model with a vast number of parameters, designed for greater accuracy and more complex language tasks. The key difference is in their size, computational demands, and application scope.

What is an LLM, and what is GPT?

LLM (Large Language Model) is a category of language models that includes massive models like GPT (Generative Pre-trained Transformer). GPT models, such as GPT-3 and GPT-4, are examples of LLMs known for their advanced natural language understanding and generation capabilities. They are pre-trained on vast amounts of data to perform a wide range of language tasks.

What is SLM in AI?

SLM (Small Language Model) in AI refers to a language model that has a relatively small number of parameters compared to large models like GPT-4. SLMs are designed to be efficient, requiring less computational power and memory, making them suitable for applications on devices with limited resources.

What is an example of a small language model?

An example of a small language model is DistilBERT, a distilled version of BERT (Bidirectional Encoder Representations from Transformers). DistilBERT was trained with knowledge distillation, learning to mimic BERT’s outputs, and its authors report that it is 40% smaller and 60% faster than BERT while retaining about 97% of BERT’s language-understanding performance.
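The core of knowledge distillation is a loss that pushes the small “student” model’s output distribution toward the large “teacher” model’s, with both softened by a temperature. The sketch below follows the soft-target recipe from Hinton et al. (including their suggested T² scaling); DistilBERT combines a term like this with a masked-language-modeling loss and a cosine embedding loss, which are not shown. The logits are made up and the function names are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    # Divide by the temperature, subtract the max for numerical stability, normalize.
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy of the student's softened distribution against the teacher's."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    cross_entropy = -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))
    # Scaling by T^2 keeps gradient magnitudes comparable when this term
    # is mixed with other losses.
    return cross_entropy * temperature ** 2


teacher = [2.0, 1.0, 0.1]        # made-up logits from a large "teacher" model
close_student = [1.8, 1.1, 0.2]  # roughly agrees with the teacher
far_student = [0.1, 1.0, 2.0]    # ranks the classes in reverse
print(distillation_loss(close_student, teacher))
print(distillation_loss(far_student, teacher))  # larger: the student disagrees
```

A temperature above 1 flattens both distributions, so the student also learns how the teacher ranks the wrong answers, not just which answer it picks.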

What is the future of small language models?

The future of small language models looks promising as they continue to make AI more accessible and efficient. Advances in model compression and optimization techniques will likely improve their performance. They will play a crucial role in edge computing, mobile applications, and scenarios where computational resources are limited.

How to build a small language model?

Building a small language model involves several steps:

- Data Collection: Gather and preprocess a relevant dataset.

- Model Selection: Choose a suitable architecture, such as a smaller version of BERT or GPT.

- Training: Train (or pre-train) the model, often with techniques such as knowledge distillation from a larger model, to get good performance while keeping the parameter count small.

- Fine-tuning: Fine-tune the model on specific tasks to improve its accuracy and applicability.
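The data-preparation step already shapes model size: each vocabulary entry gets its own embedding vector, so the embedding table holds vocabulary size × embedding width parameters. Below is a minimal sketch of preprocessing under simplifying assumptions: a word-level tokenizer (real small models such as DistilBERT use subword tokenizers like WordPiece) and hypothetical helpers `build_vocab` and `encode` that cap the vocabulary and map text to integer ids.

```python
import re
from collections import Counter


def words(text):
    # Simplistic word-level tokenizer; real SLMs use subword tokenizers (WordPiece, BPE).
    return re.findall(r"[a-z']+", text.lower())


def build_vocab(texts, max_size):
    """Keep the most frequent words; ids 0 and 1 are reserved for padding and unknowns."""
    counts = Counter(tok for text in texts for tok in words(text))
    vocab = {"<pad>": 0, "<unk>": 1}
    for token, _ in counts.most_common(max(0, max_size - len(vocab))):
        vocab[token] = len(vocab)
    return vocab


def encode(text, vocab):
    # Out-of-vocabulary words map to the <unk> id.
    return [vocab.get(tok, vocab["<unk>"]) for tok in words(text)]


corpus = ["The cat sat.", "The dog sat.", "A cat ran."]
vocab = build_vocab(corpus, max_size=5)
print(vocab)                          # {'<pad>': 0, '<unk>': 1, 'the': 2, 'cat': 3, 'sat': 4}
print(encode("The dog sat.", vocab))  # [2, 1, 4]
```

Capping `max_size` trades coverage (more words fall back to `<unk>`) for a smaller embedding table, one of the levers for keeping a model small.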

Why are smaller models better?

Smaller models are better in situations where efficiency, speed, and lower resource consumption are essential. They are ideal for deployment on devices with limited computational power, such as smartphones and IoT devices. Smaller models also reduce the cost of development and maintenance, making AI technology more accessible.

What are the four types of language models?

There is no single canonical taxonomy, but language models are often grouped into four broad families:

- Statistical Language Models: Based on probability distributions over sequences of words.

- Rule-Based Language Models: Use handcrafted rules to interpret and generate text.

- Neural Language Models: Use neural networks to model language, such as LSTMs and Transformers.

- Hybrid Models: Combine elements of statistical, rule-based, and neural models to leverage the strengths of each approach.
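The simplest statistical language model is a bigram model, which estimates the probability of each word given the previous word from counts in a corpus. Below is a minimal sketch, assuming whitespace tokenization, `<s>`/`</s>` sentence markers, and add-one (Laplace) smoothing so that unseen word pairs still get a small nonzero probability; the class name `BigramLM` is hypothetical.

```python
import math
from collections import Counter


class BigramLM:
    """Bigram language model with add-one (Laplace) smoothing."""

    def __init__(self, sentences):
        self.context_counts = Counter()  # how often each token precedes another
        self.bigram_counts = Counter()
        words = set()
        for sentence in sentences:
            tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
            words.update(tokens[1:-1])
            self.context_counts.update(tokens[:-1])
            self.bigram_counts.update(zip(tokens[:-1], tokens[1:]))
        # Possible next tokens: every training word plus the end marker.
        self.vocab_size = len(words) + 1

    def prob(self, prev, word):
        # P(word | prev) = (count(prev, word) + 1) / (count(prev) + |V|)
        return (self.bigram_counts[(prev, word)] + 1) / (
            self.context_counts[prev] + self.vocab_size
        )

    def sentence_logprob(self, sentence):
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        return sum(math.log(self.prob(a, b)) for a, b in zip(tokens[:-1], tokens[1:]))


lm = BigramLM(["the cat sat", "the dog sat", "the cat ran"])
print(lm.sentence_logprob("the cat sat"))  # higher (less negative)
print(lm.sentence_logprob("sat cat the"))  # lower: unusual word order
```

Neural language models replace these count tables with learned parameters, which lets them generalize to word sequences they never saw in training.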

How to run small language models?

Running small language models involves the following steps:

- Environment Setup: Install necessary libraries and frameworks (e.g., TensorFlow, PyTorch).

- Load the Model: Load the pre-trained small language model.

- Inference: Use the model to perform tasks such as text generation, translation, or classification.

- Optimization: Reduce latency and memory use, for example by quantizing the model’s weights to 8-bit integers, batching requests, or using an optimized inference runtime.
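Quantization is one of the most common runtime optimizations for small models: each weight is stored as a 1-byte integer plus a shared scale factor instead of a 4-byte float. The sketch below shows the simplest variant, symmetric per-tensor int8 quantization, on a made-up list of weights; the function names are hypothetical, and production tooling (for example, the quantization support in PyTorch or ONNX Runtime) typically uses finer-grained, per-channel schemes and integer kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    # Approximate reconstruction of the original float weights.
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.51]  # made-up float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [42, -127, 0, 90, -51]
print(max(abs(a - b) for a, b in zip(weights, restored)))  # at most scale / 2
```

The reconstruction error per weight is bounded by half the scale, which is why int8 models often lose little accuracy while shrinking to roughly a quarter of their float32 size.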

How many parameters is a small language model?

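The number of parameters in a small language model depends on where one draws the line. Compact encoder models sit in the tens of millions: DistilBERT has approximately 66 million parameters, compared to BERT-base’s 110 million. Many recent generative models described as small have one to a few billion parameters, still far fewer than the largest LLMs.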
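Most of the gap between DistilBERT and BERT-base comes from layer count: DistilBERT keeps BERT-base’s hidden size (768) and vocabulary (30,522 tokens) but uses 6 Transformer layers instead of 12. A common back-of-the-envelope estimate, assumed here, is about 12·d² weights per layer (4·d² for the attention projections plus 8·d² for a feed-forward block with a 4·d hidden layer) plus the token and position embedding tables. The function name is hypothetical, and the estimate ignores biases, layer norms, and task-specific heads.

```python
def estimate_transformer_params(num_layers, hidden, vocab_size, max_positions=512):
    """Rough parameter count for a BERT-style encoder.

    Each layer has ~4*d^2 attention weights (Q, K, V, output) plus ~8*d^2
    feed-forward weights (d -> 4d -> d), so ~12*d^2 per layer. Biases,
    layer norms, and task heads are ignored.
    """
    per_layer = 12 * hidden ** 2
    embeddings = (vocab_size + max_positions) * hidden  # token + position embeddings
    return num_layers * per_layer + embeddings


bert_base = estimate_transformer_params(num_layers=12, hidden=768, vocab_size=30522)
distilbert = estimate_transformer_params(num_layers=6, hidden=768, vocab_size=30522)
print(f"BERT-base ~{bert_base / 1e6:.1f}M, DistilBERT ~{distilbert / 1e6:.1f}M")
# BERT-base ~108.8M, DistilBERT ~66.3M
```

The estimates land close to the published 110 million and 66 million figures, which suggests that, at this scale, halving the depth is what roughly halves the non-embedding parameters.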

What is the use of small language models?

Small language models are used in various applications where efficiency is crucial, such as:

- Mobile applications (e.g., virtual assistants, language translation)

- Chatbots for customer service

- Educational tools for personalized learning

- Embedded systems in IoT devices

How big are small language models?

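Small language models are significantly smaller than their large counterparts. Compact models typically take from a few megabytes to a few hundred megabytes, while models with a billion or more parameters take a few gigabytes. Size is roughly the parameter count times the bytes stored per parameter (4 for 32-bit floats, 2 for 16-bit floats, 1 for 8-bit integers), so quantization can shrink a model substantially. This compact size allows them to be deployed on devices with limited storage and computational resources.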
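The size rule of thumb can be turned into a quick calculator. This sketch assumes that the weights dominate the file size (real checkpoints add small amounts of configuration and tokenizer data) and uses decimal megabytes; the names `BYTES_PER_PARAM` and `model_size_mb` are hypothetical.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def model_size_mb(num_params, dtype="float32"):
    """Approximate weight storage in megabytes (10^6 bytes), ignoring file overhead."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


# A DistilBERT-sized model of roughly 66 million parameters.
for dtype in BYTES_PER_PARAM:
    print(f"66M params as {dtype}: ~{model_size_mb(66_000_000, dtype):.0f} MB")
# 66M params as float32: ~264 MB
# 66M params as float16: ~132 MB
# 66M params as int8: ~66 MB
```

By the same arithmetic, a 1-billion-parameter model stored in 16-bit floats needs about 2,000 MB, which is why models at that scale are usually quantized before being run on phones.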
