In the fast-paced world of deep learning, finding efficient ways to train and deploy large models is crucial. One tool that has been making waves in this space is DeepSpeed. If you’re new to this, don’t worry. Let’s dive into what DeepSpeed is, why it’s important, and how it’s transforming the landscape of AI.

What is DeepSpeed?

DeepSpeed is an open-source deep learning optimization library developed by Microsoft and built on top of PyTorch. It aims to make training large models faster, cheaper, and more memory-efficient. DeepSpeed is designed to handle the most demanding AI tasks, allowing researchers and developers to push the boundaries of what’s possible with AI.

Why is DeepSpeed Important?

In the realm of AI, the size of models and datasets is growing exponentially. Training these massive models can be extremely resource-intensive, often requiring days or even weeks on high-performance computing clusters. DeepSpeed addresses this challenge by optimizing memory usage and improving training speeds, making it feasible to train larger models without breaking the bank.

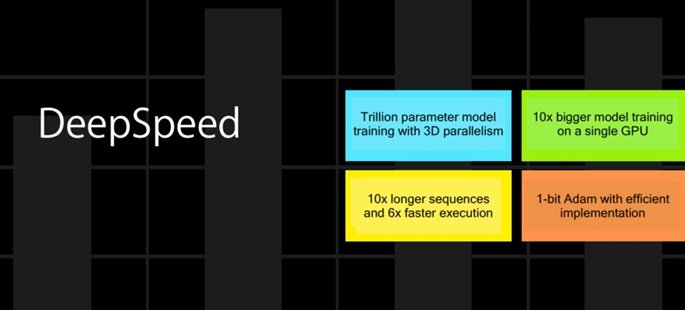

Key Features of DeepSpeed

- ZeRO (Zero Redundancy Optimizer):

  - What it does: ZeRO reduces memory redundancy in data-parallel training. Instead of every GPU holding a full copy of the optimizer states, gradients, and parameters, ZeRO partitions them across GPUs, enabling efficient training of very large models.

  - Real-life example: Imagine training a language model with billions of parameters, such as GPT-3. Without optimization, this process would require enormous amounts of memory on every GPU. ZeRO reduces per-GPU memory usage, allowing researchers to train such models on fewer or smaller GPUs than would otherwise be needed.

- Advanced Data Parallelism:

  - What it does: DeepSpeed supports data parallelism, in which each GPU processes a different slice of every batch and the gradients are averaged across GPUs, increasing training throughput.

  - Real-life example: A company working on a new AI-driven recommendation system for e-commerce can leverage DeepSpeed to train their models faster, thus reducing the time to market and staying ahead of competitors.

- Mixed Precision Training:

  - What it does: This technique performs most calculations in 16-bit floating point (FP16 or BF16) where full 32-bit precision is not necessary, speeding up training and reducing memory use with little or no loss of accuracy.

  - Real-life example: In image recognition tasks, a healthcare startup developing AI for early cancer detection can use mixed precision training to process large datasets quickly, allowing them to iterate and improve their models faster.
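To make the mixed precision idea more concrete, here is a minimal sketch of loss scaling, a trick commonly paired with FP16 training so that very small gradients are not rounded to zero. It uses only the Python standard library to simulate half precision; the gradient value and the scale factor are made-up numbers chosen for illustration, not values taken from DeepSpeed.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


tiny_grad = 1e-8     # hypothetical gradient, smaller than FP16 can represent
loss_scale = 1024.0  # hypothetical fixed scale; trainers often adjust this dynamically

# Stored directly in FP16, the gradient underflows to zero and its signal is lost.
naive = to_fp16(tiny_grad)

# Multiplying the loss by a constant multiplies every gradient by the same
# constant, which lifts this value into FP16 range. Dividing by the scale
# afterwards, in full precision, recovers an approximation of the original.
scaled = to_fp16(tiny_grad * loss_scale)
recovered = scaled / loss_scale

print(f"naive FP16 gradient:    {naive}")
print(f"recovered with scaling: {recovered:.3e}")
```

The recovered value is not bit-for-bit identical to the original, but it is close, whereas the unscaled FP16 value is exactly zero. This is one reason mixed precision setups typically keep a full-precision copy of the weights for the update step.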

Real-World Applications of DeepSpeed

NLP (Natural Language Processing)

DeepSpeed has been instrumental in advancing NLP by enabling the training of large-scale language models. For instance, Microsoft used DeepSpeed to train Turing-NLG, a 17-billion-parameter language model, and the open BLOOM model was trained with a combination of Megatron-LM and DeepSpeed. Models of this scale, like OpenAI’s GPT-3, underpin applications such as automated content creation, sentiment analysis, and real-time translation.

Autonomous Vehicles

Companies developing self-driving technology rely heavily on AI models that require vast amounts of data and computation. DeepSpeed helps in training these models more efficiently, accelerating the development of safer and more reliable autonomous vehicles.

Scientific Research

In fields like genomics and climate modeling, researchers deal with incredibly complex data and require high computational power. DeepSpeed allows scientists to run their simulations and analyses faster, leading to quicker discoveries and innovations.

How to Get Started with DeepSpeed

If you’re excited to try DeepSpeed, here’s a quick guide to getting started:

- Installation: You can install DeepSpeed via pip by running `pip install deepspeed`. A working PyTorch installation is required.

- Integration: Integrate DeepSpeed with your existing PyTorch projects. The DeepSpeed documentation provides detailed instructions and examples.

- Experimentation: Start experimenting with DeepSpeed’s features like ZeRO, advanced data parallelism, and mixed precision training to see how they can optimize your model training process.
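The steps above can be sketched as follows. This is a minimal illustration rather than a complete training script: `build_ds_config` and `train` are hypothetical helper names, the batch size and learning rate are placeholder values, and the model is assumed to return a scalar loss from its forward pass. The configuration keys and the `deepspeed.initialize`, `backward`, and `step` calls follow the DeepSpeed documentation, but check them against the version you install.

```python
import json


def build_ds_config(zero_stage: int = 2, micro_batch_size: int = 8,
                    use_fp16: bool = True) -> dict:
    """Build a small DeepSpeed configuration (hypothetical helper)."""
    return {
        "train_micro_batch_size_per_gpu": micro_batch_size,
        "gradient_accumulation_steps": 1,
        "optimizer": {"type": "AdamW", "params": {"lr": 1e-4}},
        "fp16": {"enabled": use_fp16},                # mixed precision training
        "zero_optimization": {"stage": zero_stage},   # ZeRO stage 0 to 3
    }


def train(model, batches, config: dict) -> None:
    """Sketch of a training loop; assumes model(batch) returns a scalar loss."""
    import deepspeed  # imported lazily so the config helper works without it

    # DeepSpeed wraps the PyTorch model in an engine that owns the optimizer.
    engine, _, _, _ = deepspeed.initialize(
        model=model,
        model_parameters=model.parameters(),
        config=config,
    )
    for batch in batches:
        # Assumption: each batch is already on the right device and dtype.
        loss = engine(batch)
        engine.backward(loss)  # replaces loss.backward()
        engine.step()          # replaces optimizer.step() and zero_grad()


print(json.dumps(build_ds_config(), indent=2))
```

A script like this is normally started with the `deepspeed` launcher (for example `deepspeed train.py`) so that one process is created per GPU. Keeping the configuration in a plain dictionary makes it easy to try a different ZeRO stage or turn FP16 off without touching the training loop.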

Conclusion

DeepSpeed is revolutionizing the way we approach deep learning, making it accessible to more researchers and developers. Its advanced optimization techniques allow for the efficient training of large models, enabling breakthroughs in various AI applications. Whether you’re working on NLP, autonomous vehicles, or scientific research, DeepSpeed can significantly enhance your project’s performance and scalability.

By incorporating DeepSpeed into your AI workflow, you not only save time and resources but also open up new possibilities for innovation. So, why not give it a try and see how it can supercharge your deep learning endeavors?

FAQs about DeepSpeed

1. What is DeepSpeed used for?

DeepSpeed is an open-source deep learning optimization library developed by Microsoft, designed to scale up the training of large AI models. It improves memory efficiency and training speed and reduces costs, making it feasible to train massive models with less hardware than would otherwise be required.

2. What is the difference between CUDA and DeepSpeed?

CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) model created by NVIDIA. It allows developers to use NVIDIA GPUs for general-purpose processing. DeepSpeed, on the other hand, is a library that optimizes deep learning model training, often leveraging CUDA for GPU acceleration but providing additional optimizations like memory management and parallelism.

3. What is the difference between FSDP and DeepSpeed?

FSDP (Fully Sharded Data Parallel) and DeepSpeed both aim to reduce the memory cost of training. FSDP is PyTorch’s built-in implementation of sharding all model states (optimizer states, gradients, parameters) across multiple devices, which is comparable to ZeRO Stage 3. DeepSpeed is a separate library that provides the ZeRO stages along with additional features such as offloading model states to CPU memory and pipeline parallelism, making it a broader toolkit.

4. What is the difference between DeepSpeed Stage 2 and 3?

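DeepSpeed’s ZeRO (Zero Redundancy Optimizer) has multiple stages, each building on the previous one. Stage 1 partitions only the optimizer states. The two stages most often compared are: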

- Stage 2: Optimizes memory by partitioning optimizer states and gradients across data-parallel processes.

- Stage 3: Further enhances memory efficiency by partitioning model states, including parameters, gradients, and optimizer states, making it possible to train even larger models.
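The effect of each stage can be estimated with some simple arithmetic. The sketch below assumes mixed precision training with Adam, using the per-parameter byte counts from the ZeRO paper: 2 bytes for FP16 weights, 2 for FP16 gradients, and 12 for FP32 optimizer states. The function name is hypothetical, and activations, temporary buffers, and fragmentation are ignored, so real usage will be higher.

```python
def zero_model_state_gb(num_params: float, num_gpus: int, stage: int) -> float:
    """Approximate per-GPU memory (in GB) for model states at a given ZeRO stage.

    Assumes mixed precision Adam: 2 bytes/param for FP16 weights, 2 for FP16
    gradients, and 12 for FP32 optimizer states (master weights, momentum,
    variance). Stage 0 means plain data parallelism with no partitioning.
    """
    params, grads, optim = 2.0, 2.0, 12.0
    if stage >= 1:
        optim /= num_gpus   # Stage 1: partition optimizer states
    if stage >= 2:
        grads /= num_gpus   # Stage 2: also partition gradients
    if stage >= 3:
        params /= num_gpus  # Stage 3: also partition parameters
    return num_params * (params + grads + optim) / 1e9


# Example: a 7.5-billion-parameter model trained on 64 GPUs.
for stage in range(4):
    gb = zero_model_state_gb(7.5e9, 64, stage)
    print(f"ZeRO stage {stage}: {gb:.2f} GB per GPU")
```

For this example the estimate drops from about 120 GB per GPU without ZeRO to under 2 GB at Stage 3, which is why Stage 3 makes models trainable that would not otherwise fit. The trade-off is extra communication, since parameters must be gathered before they are used.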

5. Who created DeepSpeed?

DeepSpeed was created by Microsoft Research. It is part of Microsoft’s broader efforts to advance AI research and provide tools that make AI model training more efficient and accessible.

6. What is MPU in DeepSpeed?

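MPU (Model Parallelism Unit) in DeepSpeed is an optional object you pass to `deepspeed.initialize` when combining DeepSpeed with model parallelism, where a single model is split across multiple devices because it does not fit into the memory of a single device. The object reports each process’s rank, group, and world size for both model parallelism and data parallelism, so DeepSpeed knows which processes hold pieces of the same model copy and which hold different copies.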
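As a rough illustration of the bookkeeping such an object does, the sketch below maps a global process rank to its position in a model-parallel and data-parallel grid. The names `RankLayout` and `layout_for` are hypothetical, and the layout (consecutive ranks share one model copy) is an assumption made for this example. A real MPU, such as the one in Megatron-LM, also exposes the communication groups and world sizes, which are omitted here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RankLayout:
    """Hypothetical helper: where one process sits in the parallelism grid."""
    model_parallel_rank: int  # which piece of the model this process holds
    data_parallel_rank: int   # which copy of the model this process belongs to


def layout_for(global_rank: int, world_size: int,
               model_parallel_size: int) -> RankLayout:
    """Assume consecutive ranks hold the pieces of one model copy."""
    if world_size % model_parallel_size != 0:
        raise ValueError("world_size must be divisible by model_parallel_size")
    if not 0 <= global_rank < world_size:
        raise ValueError("global_rank must be in [0, world_size)")
    return RankLayout(
        model_parallel_rank=global_rank % model_parallel_size,
        data_parallel_rank=global_rank // model_parallel_size,
    )


# Example: 8 GPUs, each model copy split across 2 GPUs, giving 4 copies.
for rank in range(8):
    print(rank, layout_for(rank, world_size=8, model_parallel_size=2))
```

Under this assumed layout, ranks 0 and 1 hold the two halves of the first model copy, ranks 2 and 3 the second copy, and so on. Gradients are averaged only among processes with the same model-parallel rank.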

7. What is ZeRO in DeepSpeed?

ZeRO (Zero Redundancy Optimizer) is an optimization technique in DeepSpeed that reduces memory redundancy and enables the efficient training of very large models. It partitions model states across devices to minimize memory usage and maximize scalability.

8. What is ZeRO optimizer?

“ZeRO optimizer” usually refers to the same ZeRO technique rather than to a separate optimization algorithm such as Adam. ZeRO wraps the optimizer you already use and distributes optimizer states, gradients, and (at Stage 3) parameters across multiple devices, thus reducing the per-device memory footprint and enabling larger models to be trained.

9. Is FSDP faster than DDP?

FSDP (Fully Sharded Data Parallel) is usually not faster than DDP (Distributed Data Parallel) step for step, because sharding model states adds communication to gather parameters before they are used. Its advantage is memory: it can train models or batch sizes that would not fit under DDP, and a larger batch can raise overall throughput. The actual difference depends on the specific model and hardware configuration. DDP is simpler and is often faster for smaller models that fit within the memory of a single device.

10. What is DeepSpeed in LLM?

In the context of LLM (Large Language Models), DeepSpeed provides the optimizations needed to train and deploy these models efficiently. It helps manage the significant computational and memory requirements of LLMs, enabling researchers and developers to train models like GPT-3 more affordably and quickly.
