
Introduction – DenseAV from MIT

Imagine this: you’re an infant, babbling away at your parents. You have no idea what the words mean, but by watching their faces, their actions, and the way they respond to your sounds, you slowly start to decipher the world. That is roughly how DenseAV, a new algorithm from MIT, is learning language!

DenseAV: Unveiling Language from Videos

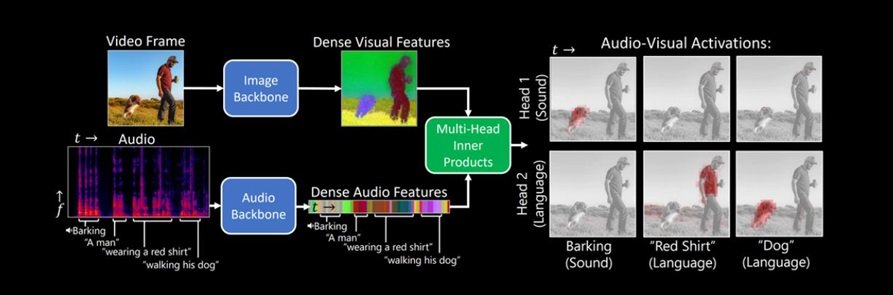

Developed by a team at MIT, Dense Audio-Visual (DenseAV) is an AI model that’s breaking new ground in language acquisition. Unlike many traditional AI systems, which rely on mountains of labeled data (text and corresponding meanings), DenseAV learns simply by watching videos of people talking.

Think about it – how often do we learn a new word by simply hearing it and seeing what it refers to? For instance, a child might hear “apple” while their parent points to the fruit on the table. DenseAV uses a similar approach, but on a much larger scale. By sifting through countless videos, it starts to associate sounds with visuals, piecing together the meaning of words and phrases.

Here’s the Cool Part: How Does DenseAV Work?

The secret sauce behind DenseAV is a technique called contrastive learning. Instead of being told what things are, the model learns by comparison. Imagine showing your child two toys, a red car and a green teddy bear, and naming each one: to tell them apart, they only need to notice what differs between them. DenseAV works similarly. It compares audio and visual signals, figuring out which cues consistently appear together (a matched pair from the same video) and which don’t (a mismatched pair from different videos).

For example, it might compare a video of someone saying “cup” while holding a mug with a video of someone talking about something else, with no mug in sight. By contrasting these examples, DenseAV refines its understanding of the word “cup” and how it relates to the visual object.
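To make the “contrasting” idea concrete, here is a minimal Python sketch of a generic InfoNCE-style contrastive loss, a standard way to implement this kind of learning. It is a simplification, not DenseAV’s actual architecture or training objective: it uses one embedding per clip, and the toy 2-D vectors and the `temperature=0.1` value are made-up assumptions for illustration.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def one_direction_loss(queries, keys, temperature):
    """Average cross-entropy of picking keys[i] as the match for queries[i]
    out of every key in the batch (the other keys act as mismatched pairs)."""
    total = 0.0
    for i, q in enumerate(queries):
        # Scaled similarity of query i to every key in the batch.
        logits = [sum(a * b for a, b in zip(q, k)) / temperature for k in keys]
        # Log-sum-exp, shifted by the max for numerical stability.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_denom - logits[i]  # -log softmax probability of the true match
    return total / len(queries)


def contrastive_loss(audio, visual, temperature=0.1):
    """Symmetric InfoNCE-style loss (hypothetical helper): audio[i] and visual[i]
    come from the same clip; every other audio/visual combination is a negative."""
    audio = [normalize(a) for a in audio]
    visual = [normalize(v) for v in visual]
    return 0.5 * (one_direction_loss(audio, visual, temperature)
                  + one_direction_loss(visual, audio, temperature))


# Made-up 2-D embeddings for three clips (think "cup", "dog", "car").
audio = [[1.0, 0.1], [0.1, 1.0], [-1.0, 0.2]]
aligned = [[0.9, 0.2], [0.0, 1.0], [-1.0, 0.1]]  # visuals paired with the right audio
shuffled = [aligned[1], aligned[2], aligned[0]]  # same visuals, wrong pairing

# Correct pairings score a lower loss than wrong ones.
print(contrastive_loss(audio, aligned) < contrastive_loss(audio, shuffled))  # True
```

Training a model to push this loss down pulls matched audio and visual embeddings together and pushes mismatched ones apart. The loss is computed in both directions (audio to visual and visual to audio) so that neither modality is favored.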

Real-World Applications: A Glimpse into the Future

DenseAV’s ability to learn language naturally has exciting possibilities. Here are a few areas where it could make a big difference:

- Multimedia Search: Imagine searching for a specific scene in a movie by simply describing it with words. DenseAV could bridge the gap between audio and video, making searches more intuitive.

- Language Learning: Learning a new language can be daunting. DenseAV could personalize learning by analyzing videos tailored to the user’s interests and learning pace.

- Robotics: Robots that can understand spoken commands would be a game-changer. DenseAV could equip them with the ability to interpret not just the words themselves, but also the context and emotions behind them.
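The multimedia-search idea rests on audio and video ending up in comparable embedding spaces, which is what contrastive training aims for. Under that assumption, searching becomes a nearest-neighbor lookup. Here is a minimal Python sketch; the scene names, the 3-D embeddings, and the `search` helper are all hypothetical and are not DenseAV’s actual interface.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def search(query_emb, scene_embs, top_k=2):
    """Hypothetical helper: rank scenes by how closely their visual embedding
    matches the embedding of a spoken query, and return the best top_k names."""
    ranked = sorted(scene_embs, key=lambda name: cosine(query_emb, scene_embs[name]),
                    reverse=True)
    return ranked[:top_k]


# Made-up embeddings; in practice these would come from trained audio and visual encoders.
scenes = {
    "kitchen_scene": [0.9, 0.1, 0.0],
    "beach_scene": [0.0, 0.2, 0.95],
    "dog_park_scene": [0.1, 0.9, 0.1],
}
spoken_query = [0.8, 0.2, 0.1]  # e.g. the embedding of someone saying "the kitchen scene"

print(search(spoken_query, scenes))  # ['kitchen_scene', 'dog_park_scene']
```

A real system would index millions of embeddings with an approximate nearest-neighbor structure rather than sorting them all, but the ranking idea is the same.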

DenseAV from MIT is still under development, but it represents a significant leap in AI’s ability to grasp language. By mimicking how humans learn, it paves the way for more natural and interactive AI experiences in the future.

Want to learn more about DenseAV from MIT? Check out this resource:

MIT News: New algorithm discovers language just by watching videos https://news.mit.edu/2024/denseav-algorithm-discovers-language-just-watching-videos-0611
